In the dynamic landscape of web development, one question remains a constant: how can we build web applications that not only meet today’s demands but also scale seamlessly with tomorrow’s growth? The answer lies in understanding and implementing modern architectural principles and development best practices. Welcome to ‘Building Scalable Web Apps: A Guide to Modern Architecture and Best Practices’, your comprehensive roadmap to crafting robust, efficient, and scalable web applications.

Let’s start with a staggering fact: according to a 2021 report by Statista, the global web application market is projected to reach a market size of over $130 billion by 2026, growing at a CAGR of 11.8% during the forecast period. This exponential growth underscores the critical need for web applications that can scale, adapt, and perform under pressure. But how do we achieve this? The secret lies in embracing modern architectural patterns and adhering to development best practices.

You might be thinking, ‘That sounds great, but where do I start?’ or perhaps, ‘I’ve tried scaling my apps before, but it’s been a struggle.’ Rest assured, you’re not alone. Scaling web applications is a complex task that requires a deep understanding of various architectural patterns, tools, and methodologies. But fear not, for this article is designed to be your guiding light in this journey.

Scaling web applications is a challenge, and this guide aims to equip you with the knowledge and tools to meet it. We’ll delve into modern architectural patterns like Microservices, Serverless, and Event-Driven Architecture, explaining their benefits and use cases. We’ll also explore development best practices, from version control and continuous integration to load balancing and caching strategies.

By the end of this article, you’ll have a clear understanding of how to design, develop, and deploy scalable web applications. You’ll learn how to identify bottlenecks, optimize performance, and ensure high availability. Whether you’re a seasoned developer looking to refine your skills or a newcomer eager to learn, this guide is your key to unlocking the world of scalable web applications.

So, buckle up and get ready to embark on an exciting journey. Let’s dive into the fascinating world of modern architecture and best practices, and together, we’ll build web applications that are not just for today, but for tomorrow as well.

Mastering the Art of Scalability: From Architecture to Development Best Practices

In the dynamic world of software development, scalability is not merely an option, but a necessity. It’s the art of designing systems that can grow and adapt, handling increased load and user base without breaking a sweat. ‘Mastering the Art of Scalability’ is a journey that begins with architecture, the blueprint of your software’s future growth. It’s about understanding the principles of distributed systems, the wisdom of microservices, and the beauty of horizontal scaling. But it’s not just about the big picture; it’s also about the details. It’s about writing efficient code, optimizing databases, and leveraging caching mechanisms. It’s about understanding your system’s bottlenecks and knowing when to scale up or out. It’s about embracing DevOps practices, automating deployment, and ensuring high availability. It’s about monitoring, logging, and reacting to issues before they become disasters. It’s about continuous improvement, always striving to make your system faster, stronger, and more resilient. In essence, mastering the art of scalability is about turning your software into a well-oiled machine that can handle anything you, or the market, throw at it. It’s a challenge, but it’s also a rewarding journey that turns developers into true system architects.

Understanding Scalability: Why and When to Scale

In the dynamic world of web applications, understanding scalability is not just an advantage, but a necessity. Scalability, in this context, refers to the ability of a system, network, or process to handle a growing amount of work or its potential to be enlarged to accommodate that growth. It’s about ensuring that your web application can manage increased user load, data, and complexity without compromising performance or reliability.

Imagine your web application as a bustling restaurant. When it first opens, a small team can handle the initial flow of customers. However, as word spreads and popularity grows, you need to scale, either by expanding the capacity of the existing restaurant (vertical scaling) or by opening more branches (horizontal scaling). The same principle applies to web applications. As traffic increases, you need to scale to meet the demand.

Vertical scaling, also known as ‘scale up’, involves adding more resources to an existing server. This could mean upgrading the server’s RAM, CPU, or storage. It’s like promoting a waiter to a manager who can handle more tasks at once. However, there’s a limit to how much you can upgrade a single server, and it can be expensive.

Horizontal scaling, or ‘scale out’, involves adding more servers to your infrastructure. Each server handles a part of the workload. It’s like opening a new branch of your restaurant. It’s more flexible and cost-effective, as you can add or remove servers as needed. However, it requires careful management to ensure all servers work together seamlessly.

So, when is it necessary to scale? The answer lies in your application’s growth trajectory. If you’re expecting a sudden increase in traffic, like a viral marketing campaign, you might need to scale horizontally beforehand. If you’re experiencing consistent growth, a combination of both might be necessary. The key is to monitor your application’s performance and user load, and scale accordingly. After all, a successful web application is one that can grow with its users.

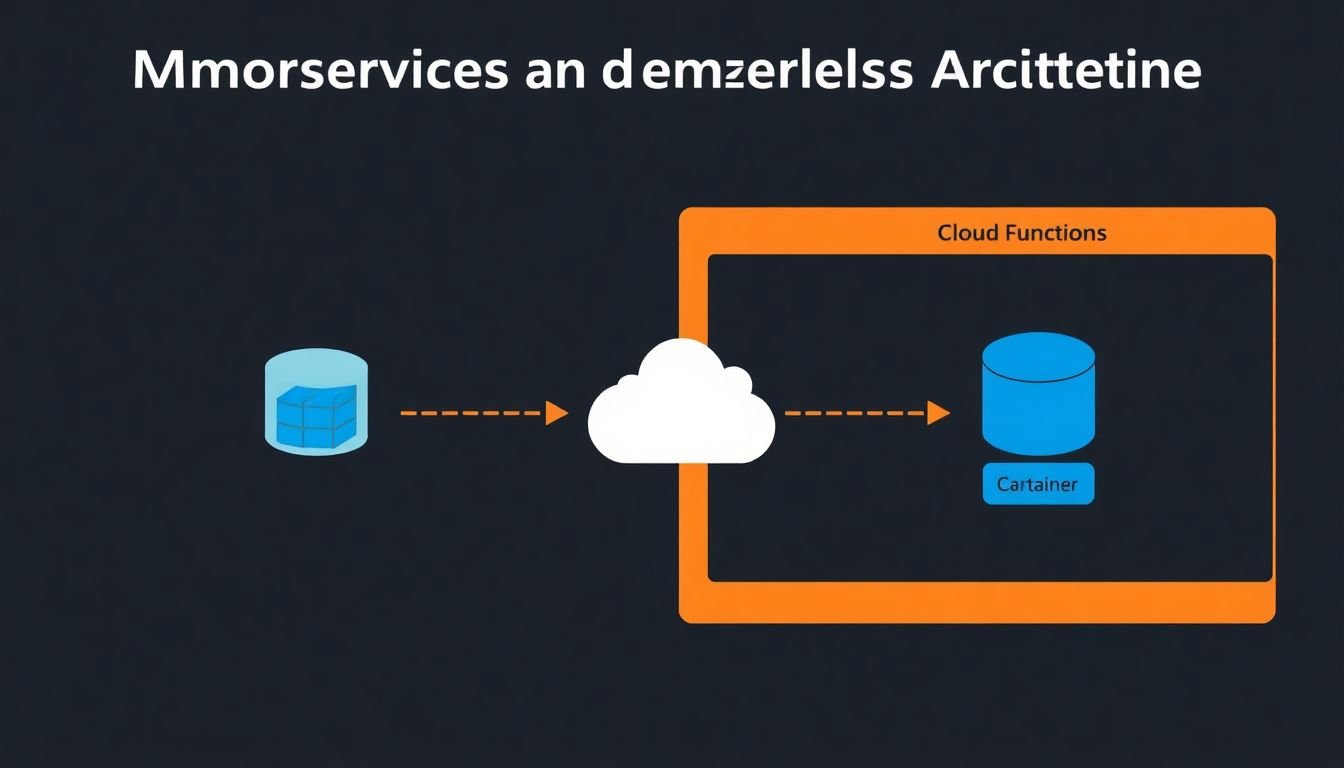

Modern Architecture: Microservices and Serverless

In the dynamic landscape of modern software development, two architectural patterns have emerged as game-changers: Microservices and Serverless. Both approaches aim to enhance scalability, flexibility, and efficiency, but they cater to different needs and have unique characteristics.

The microservices approach, an evolution of the monolithic architecture, breaks down an application into a suite of small, independent services, each running in its own process and communicating with lightweight mechanisms, often an HTTP API. Each service is built around a specific business capability and can be developed, deployed, and scaled independently.

This modular approach offers several benefits. Firstly, it enables continuous delivery and deployment, allowing teams to work on individual services without impacting the entire system. Secondly, it promotes fault isolation; if one service fails, it doesn’t necessarily bring down the entire application. Lastly, it facilitates technology diversity, as each service can be developed using the best tool for the job.

However, microservices also present challenges. They introduce complexity in data management, as services need to share data without compromising their independence. They also require robust service discovery and load balancing mechanisms. Moreover, testing and monitoring become more complex in a distributed system.

Serverless architecture, on the other hand, takes a different approach. It shifts the operational responsibility from the developer to the cloud provider. In a serverless model, you write code without worrying about servers, allowing you to focus solely on the business logic. This approach is particularly useful for event-driven applications and applications with variable traffic. It offers automatic scaling, reducing the need for capacity planning, and you only pay for the compute time you use, making it cost-effective.

However, serverless architectures also have their challenges. They can introduce cold start issues, where there’s a delay in executing code after a period of inactivity. They also have limitations in terms of control and customization, as you’re relying on the cloud provider’s services.

The choice between microservices and serverless depends on your specific needs. Microservices are ideal for large, complex applications that require high scalability and flexibility. Serverless, however, is perfect for applications with variable traffic or for teams that want to offload operational responsibilities to the cloud provider. In some cases, a hybrid approach, combining the benefits of both, might be the best solution.

Designing for Scalability: Caching and CDNs

In the dynamic landscape of web applications, scalability is not just a feature, but a necessity. Two powerful tools that enable this scalability are caching and Content Delivery Networks (CDNs). Let’s delve into their roles and strategies for efficient use.

Caching is akin to a smart librarian in a bustling library. It stores frequently accessed data, reducing the time and resources needed to retrieve it. This is particularly useful for static content like images, videos, and stylesheets. The first step in efficient caching is identifying what can be cached. This includes content that doesn’t change frequently and data that’s expensive to generate. Once identified, implement a caching policy that suits your application’s needs. For instance, you might use a time-based policy for images, or a version-based policy for CSS files.
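To make the two policies mentioned above concrete, here is a minimal sketch in Python. The `TTLCache` class and the `versioned_url` helper are hypothetical names invented for this illustration, not part of any particular caching library, and a production cache would also need size limits and thread safety.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Tiny in-memory cache with a time-based expiry policy (illustrative only)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so the expiry logic can be tested without waiting
        self._store: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_compute(self, key: Hashable, compute: Callable[[], Any]) -> Any:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: the stored value is still fresh
        value = compute()  # cache miss or expired entry: regenerate the value
        self._store[key] = (now + self.ttl, value)
        return value


def versioned_url(path: str, version: str) -> str:
    """Version-based policy: a new version produces a new URL, so stale copies are never reused."""
    return f"{path}?v={version}"
```

The time-based cache suits data that may be slightly stale for a while, whereas the versioned URL lets browsers and intermediaries cache a file for a long time because any change to the file changes its address.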

Content Delivery Networks, or CDNs, are like a network of libraries, each with a copy of the most popular books. They distribute content across multiple servers worldwide, reducing the distance data has to travel to reach users. This not only improves load times but also ensures high availability and fault tolerance. When a user requests content, the CDN routes them to the nearest server, minimizing latency. To leverage CDNs effectively, you should choose a reliable provider, ensure your content is properly configured for the CDN, and monitor performance to identify any bottlenecks.

Both caching and CDNs offer significant benefits. They reduce server load, improving application performance and reliability. They also lower bandwidth costs, as less data needs to be transferred. Moreover, they enhance the user experience by delivering content quickly and consistently. In essence, they are the unsung heroes of scalable web applications, working tirelessly behind the scenes to ensure smooth operation.

Database Design for Scalability

Designing databases for scalability is a critical aspect of building robust, high-traffic web applications. Traditional relational databases, while powerful, can struggle with the demands of modern web applications, leading to the rise of NoSQL databases. NoSQL databases, such as MongoDB and Cassandra, offer flexible data models and horizontal scalability, making them ideal for handling large volumes of data and high user loads.

One key strategy for achieving scalability is through data partitioning, or sharding. Sharding involves splitting a large dataset into smaller, more manageable parts, each stored in a separate database instance. This allows for parallel processing and improved performance. For example, in a user-based application, data could be sharded based on user ID, with each shard handling a range of IDs. However, sharding also introduces complexity, as it requires careful coordination between shards to maintain data consistency.
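As a rough sketch of the user-ID example above, the following Python functions pick a shard for a given user. The function names and the boundary values in the usage note are assumptions made for illustration; real systems usually keep the shard map in configuration or a metadata service.

```python
import hashlib
from bisect import bisect_right
from typing import List


def range_shard(user_id: int, upper_bounds: List[int]) -> int:
    """Return the index of the shard whose ID range contains user_id.

    upper_bounds holds the exclusive upper bound of each shard, sorted ascending.
    """
    index = bisect_right(upper_bounds, user_id)
    if index >= len(upper_bounds):
        raise ValueError(f"user_id {user_id} is outside every shard range")
    return index


def hash_shard(user_id: int, shard_count: int) -> int:
    """Alternative: spread IDs evenly by hashing instead of using contiguous ranges."""
    digest = hashlib.sha256(str(user_id).encode()).digest()
    return int.from_bytes(digest[:8], "big") % shard_count
```

With `upper_bounds = [1000, 2000, 3000]`, IDs 0 to 999 land on shard 0, IDs 1000 to 1999 on shard 1, and so on. Range sharding keeps neighboring IDs together, which helps range queries, while hash sharding tends to spread load more evenly at the cost of scattering related rows.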

Another crucial aspect of scalable database design is replication. Database replication involves maintaining copies of data on multiple servers, providing redundancy and improving read performance. In case of a server failure, traffic can be routed to a replica. Replication can be synchronous, where a write is acknowledged only after the replicas have confirmed it, or asynchronous, where the primary acknowledges the write first and replicas catch up afterwards. Synchronous replication gives stronger consistency at the cost of higher write latency, while asynchronous replication is faster but replicas may briefly serve stale data. The choice depends on the specific requirements of the application.
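One common way to benefit from replicas is to send writes to the primary and spread reads across the replicas. The sketch below assumes a hypothetical `ReplicaRouter` class and made-up node names; it only chooses a node name and does not open real database connections.

```python
from typing import List


class ReplicaRouter:
    """Route writes to the primary and reads to replicas in rotation (illustrative only)."""

    def __init__(self, primary: str, replicas: List[str]):
        self.primary = primary
        self.replicas = list(replicas)
        self._next = 0  # position of the next replica to use for a read

    def route(self, is_write: bool) -> str:
        # Writes always go to the primary; reads fall back to it when no replica is left.
        if is_write or not self.replicas:
            return self.primary
        node = self.replicas[self._next % len(self.replicas)]
        self._next += 1
        return node

    def remove_replica(self, name: str) -> None:
        """Stop routing reads to a replica that has failed or been retired."""
        self.replicas.remove(name)
```

Note that with asynchronous replication a read routed to a replica may not yet see a write that was just acknowledged by the primary, so reads that must observe the latest data are often sent to the primary as well.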

In conclusion, designing databases for scalability involves a combination of strategies, including the use of NoSQL databases, sharding, and replication. Each of these techniques has its own complexities and trade-offs, and the best approach depends on the specific needs and constraints of the application. By carefully considering these factors, developers can build databases that can handle the demands of even the most challenging web applications.

Load Balancing: Ensuring High Availability

In the dynamic landscape of web applications, ensuring high availability and optimal performance is not just a goal, but a necessity. This is where the concept of load balancing comes into play, acting as the unsung hero that keeps your web applications running smoothly even under heavy traffic. Load balancing is the process of distributing network or application traffic across multiple servers to ensure no single server bears too much load, thereby preventing it from becoming a bottleneck or failing under high demand.

Understanding the importance of load balancing is like grasping the significance of a well-oiled machine. It ensures that your web application remains available and responsive, providing a seamless user experience. It also enhances fault tolerance: if one server fails, the others can pick up the slack. Finally, it allows for easy scalability, as you can simply add more servers to the pool to handle increased traffic.

Now, let’s delve into the different load balancing algorithms, each with its own set of pros and cons.

- Round Robin: This algorithm distributes incoming requests sequentially among the servers. It’s simple, easy to implement, and ensures that each server gets an equal share of the load. However, it doesn’t take into account the server’s current load or processing power, which could lead to some servers being overloaded while others are underutilized.

- Least Connections: This algorithm sends requests to the server with the fewest active connections. It adapts well when requests vary in duration, including long-lived connections, because it accounts for how busy each server currently is. However, the balancer must track connection counts for every server, and a connection count is only an approximation of actual load.

- IP Hash: This algorithm uses the client’s IP address to determine which server should handle the request. It ensures that all requests from a particular client go to the same server, which is beneficial for maintaining session state. However, it can lead to hotspots if a single IP address sends a lot of requests.

- Consistent Hashing: This algorithm hashes both servers and request keys (such as a client or session ID) onto the same ring and sends each request to the next server along the ring. Adding or removing a server therefore remaps only a small share of keys. However, it is more complex to implement and may not be suitable for all types of applications.

Each of these algorithms has its use cases, and the choice depends on the specific needs and architecture of your web application. However, the underlying goal remains the same: to ensure high availability and optimal performance, making load balancing an indispensable tool in the toolbox of any web developer or system administrator.
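The algorithms listed above can be sketched in a few lines of Python. The class names, server names, and the `vnodes` parameter are assumptions made for this illustration, not the API of any real load balancer. Using the client IP as the key for the ring gives behavior similar to IP Hash.

```python
import bisect
import hashlib
import itertools
from typing import Dict, List, Tuple


def _hash(value: str) -> int:
    """Stable 64-bit hash, unlike the per-process randomized built-in hash()."""
    return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")


class RoundRobin:
    def __init__(self, servers: List[str]):
        self._cycle = itertools.cycle(servers)

    def pick(self) -> str:
        return next(self._cycle)  # each server in turn, regardless of its load


class LeastConnections:
    def __init__(self, servers: List[str]):
        self.active: Dict[str, int] = {s: 0 for s in servers}

    def acquire(self) -> str:
        # Ties are broken by insertion order of the servers.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        self.active[server] -= 1  # call when the connection closes


class ConsistentHashRing:
    def __init__(self, servers: List[str], vnodes: int = 100):
        self.vnodes = vnodes  # virtual points per server, to even out the distribution
        self._ring: List[Tuple[int, str]] = []
        for server in servers:
            self.add(server)

    def add(self, server: str) -> None:
        for i in range(self.vnodes):
            bisect.insort(self._ring, (_hash(f"{server}#{i}"), server))

    def remove(self, server: str) -> None:
        self._ring = [point for point in self._ring if point[1] != server]

    def pick(self, key: str) -> str:
        # First ring point at or after the key's hash, wrapping around at the end.
        index = bisect.bisect_left(self._ring, (_hash(key), ""))
        return self._ring[index % len(self._ring)][1]
```

A useful property to observe with the ring: after `remove("c")`, only keys that previously mapped to `"c"` move to another server, while all other keys keep their assignment.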

Auto-Scaling: Automating Scalability

Auto-scaling, a dynamic and intelligent approach to resource management, is a game-changer in today’s cloud-centric world. It’s like having a personal, always-on, and always-ready IT assistant that ensures your applications run smoothly, even under the heaviest loads. But what exactly is auto-scaling, and how does it work its magic?

Auto-scaling is a feature that automatically adjusts the number of instances or resources in a cloud environment based on predefined rules or real-time demand. It’s like having a magic hat that pulls out more rabbits (resources) when you need them, and puts them back when you don’t. The benefits are manifold: it ensures high availability and fault tolerance, optimizes resource utilization, and can significantly reduce costs by scaling down during periods of low demand.

Implementing auto-scaling involves a few key steps, and the process varies slightly depending on the cloud environment you’re using. Let’s take a look at how it’s done in some popular cloud platforms.

In Amazon Web Services (AWS), auto-scaling is implemented using Auto Scaling groups. These groups monitor applications and automatically adjust capacity to maintain steady, predictable performance at the lowest possible cost. The metrics used for auto-scaling decisions in AWS include CPU utilization, network throughput, and request count, among others. To implement auto-scaling in AWS, you would first create an Auto Scaling group, define the launch template or launch configuration, and then set up the scaling policies or rules based on the desired metrics.

Microsoft Azure also offers auto-scaling capabilities through its Virtual Machine Scale Sets (VMSS) and App Service. In Azure, auto-scaling is based on metrics like CPU percentage, disk queue length, and network in/out, among others. To implement auto-scaling in Azure, you would create a scale set, define the scaling rules based on the desired metrics, and set up the minimum and maximum number of VM instances.

Google Cloud Platform (GCP) provides auto-scaling through its Compute Engine and Kubernetes Engine. In GCP, auto-scaling is based on metrics like CPU utilization, memory usage, and network traffic. To implement auto-scaling in GCP, you would create a managed instance group, define the scaling policy based on the desired metrics, and set up the minimum and maximum number of instances.

In all these platforms, auto-scaling can be set up to scale out (add more instances) or scale in (remove instances) based on the defined metrics and rules. It’s a powerful tool that allows you to optimize your cloud resources, ensuring that your applications are always ready to handle whatever comes their way, while keeping costs under control. So, why not let your IT assistant take the reins and enjoy the benefits of auto-scaling today?
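Regardless of the platform, a metric-based scaling rule boils down to comparing an observed metric with a target and clamping the result between a minimum and a maximum. The sketch below shows one generic proportional rule. The function name and parameters are assumptions for illustration and do not correspond to any cloud provider’s API.

```python
import math


def desired_instances(
    current: int,
    metric_value: float,
    target_value: float,
    min_instances: int,
    max_instances: int,
) -> int:
    """Proportional scaling rule: size the fleet so the per-instance metric approaches the target."""
    if current < 1 or target_value <= 0:
        raise ValueError("current must be >= 1 and target_value must be positive")
    raw = math.ceil(current * metric_value / target_value)
    # Never go below the configured minimum or above the configured maximum.
    return max(min_instances, min(max_instances, raw))
```

For example, four instances averaging 90% CPU against a 60% target yield a desired count of six. Real auto-scalers typically add cooldown periods and smoothing on top of a rule like this to avoid oscillating between scale-out and scale-in.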

Monitoring and Logging: Keeping an Eye on Your App

In the dynamic landscape of scalable web applications, monitoring and logging are not just beneficial, but absolutely indispensable. They are the eyes and ears that keep a constant vigil on your application’s health, performance, and behavior. Monitoring helps you understand how your application is performing in real-time, enabling you to identify and rectify issues promptly. It provides insights into metrics like CPU usage, memory consumption, request rates, and error rates, allowing you to make data-driven decisions to optimize your application’s performance. On the other hand, logging captures detailed information about your application’s activities, helping you diagnose issues, understand user behavior, and comply with regulatory requirements.

There are numerous tools available in the market that cater to these needs, each with its unique features. Prometheus, for instance, is a popular open-source monitoring and alerting toolkit. It collects metrics with a pull model over HTTP, periodically scraping the endpoints that your services expose. It also supports a multi-dimensional data model and has a flexible query language, PromQL, for data aggregation and analysis. Grafana, another widely used tool, is commonly paired with Prometheus as a visualization front end. It offers a wide range of chart types and supports multiple data sources, allowing you to create comprehensive dashboards.

In the logging realm, ELK Stack (Elasticsearch, Logstash, Kibana) is a powerful toolset. Elasticsearch is a distributed, open-source search and analytics engine that can handle large volumes of data. Logstash, a data collection and processing engine, can ingest data from a multitude of sources and transform it before sending it to Elasticsearch. Kibana, a data visualization tool, provides a user-friendly interface to search, analyze, and visualize the data stored in Elasticsearch. Other notable tools include Datadog, New Relic, and Splunk, each offering robust features for monitoring and logging.

To effectively use these tools, it’s crucial to first identify your application’s key performance indicators (KPIs) and the types of data you need to log. Then, you can configure your tools to monitor these KPIs and log the relevant data. Regularly reviewing and analyzing this data will help you maintain your application’s health and performance, ensuring it continues to serve your users effectively.
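As a small illustration of turning raw request data into KPIs and log lines, here is a sketch that uses only the Python standard library. The `RequestRecord` type, the choice of 5xx responses as errors, and the field names are assumptions for this example; in practice a monitoring tool would compute these from scraped metrics or shipped logs.

```python
import json
import math
from dataclasses import dataclass
from typing import List


@dataclass
class RequestRecord:
    path: str
    status: int
    duration_ms: float


def error_rate(records: List[RequestRecord]) -> float:
    """Share of requests that ended with a server error (5xx)."""
    if not records:
        return 0.0
    errors = sum(1 for r in records if r.status >= 500)
    return errors / len(records)


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile, e.g. pct=95 for p95 latency."""
    if not values:
        raise ValueError("percentile of an empty list is undefined")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def to_log_line(record: RequestRecord) -> str:
    """Structured (JSON) log line that a log pipeline can parse without regexes."""
    return json.dumps(
        {"path": record.path, "status": record.status, "duration_ms": record.duration_ms},
        sort_keys=True,
    )
```

Tracking a high percentile such as p95 alongside the error rate tends to reveal problems that an average latency would hide.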

Continuous Integration and Deployment: Streamlining the Development Process

In the dynamic world of software development, efficiency and speed are paramount. This is where Continuous Integration and Continuous Deployment (CI/CD) come into play, revolutionizing the way we build, test, and deploy web applications. CI/CD is a set of practices that automate the software delivery process, enabling development teams to integrate code changes frequently, ensure the integrity of the application, and deploy updates swiftly and safely.

The core concept of CI/CD revolves around three key stages: Continuous Integration, Continuous Testing, and Continuous Deployment. Continuous Integration involves automating the process of integrating code changes from multiple contributors into a single source. This is typically done using version control systems like Git, coupled with CI tools such as Jenkins, Travis CI, or CircleCI. These tools monitor the repository for changes, automatically build and compile the code, and run initial tests to catch any integration issues early.

Next, Continuous Testing takes over. This stage involves running a suite of automated tests on the integrated code to ensure it meets the desired quality standards. Tools like JUnit, Selenium, or Postman can be used to write and run these tests. The CI/CD pipeline should be designed to fail the build if any of these tests fail, ensuring that only tested and approved code makes it to the next stage.

Finally, Continuous Deployment takes the tested code and deploys it to the production environment. This can be done using tools like Ansible, Puppet, or Docker, which automate the deployment process, ensuring consistency and minimizing human error. In strict continuous deployment, every change that passes the pipeline is released automatically; teams that prefer a manual approval step before release are usually said to practice continuous delivery instead. Which one fits depends on the team’s preference and the complexity of the application.
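The stage-by-stage flow described above, where a failure stops anything later from running, can be sketched as follows. The `run_pipeline` function and the stage names in the usage note are hypothetical; real CI tools express the same idea in their own configuration formats.

```python
from typing import Callable, List, Tuple

# A stage is a name plus a callable that returns True on success.
Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure.

    Returns (succeeded, names of the stages that completed).
    """
    completed: List[str] = []
    for name, step in stages:
        if not step():
            return False, completed  # fail fast: later stages never run
        completed.append(name)
    return True, completed
```

For instance, with stages named `build`, `test`, and `deploy`, a failing `test` stage returns `(False, ["build"])` and the `deploy` callable is never invoked, which is what keeps untested code out of production.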

Implementing CI/CD pipelines in scalable web applications requires careful planning and consideration of best practices. Some of these best practices include:

- Using version control systems to track changes and facilitate collaboration.

- Implementing a branching strategy, such as GitFlow or Feature Branching, to manage different stages of development.

- Automating tests to ensure code quality and catch issues early.

- Using infrastructure as code (IaC) tools, like Terraform or CloudFormation, to manage and provision resources.

- Monitoring and logging the CI/CD pipeline to identify and resolve issues quickly.

- Regularly reviewing and updating the CI/CD pipeline to ensure it remains efficient and effective.

By implementing these best practices and leveraging the right tools, teams can streamline their development process, reduce manual effort, and deliver high-quality software faster and more reliably.

Security in Scalable Web Apps: Protecting Your Application

In the dynamic landscape of scalable web applications, security is not just an afterthought, but a critical aspect that demands continuous attention. As your application grows, so do the potential vulnerabilities and attack surfaces. Here are some best practices to protect your scalable web application, focusing on securing APIs, safeguarding data, and implementing robust authentication and authorization.

Securing APIs:

APIs are the lifeblood of modern web applications, but they can also be a gateway for malicious activities if not secured properly. Implementing API security involves several steps. First, use secure communication protocols like HTTPS to encrypt data in transit. Next, employ API gateways to manage access, enforce policies, and provide centralized logging. Rate limiting and throttling can help prevent API abuse and Denial of Service (DoS) attacks. Additionally, use API keys or tokens for client identification and authentication.
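Rate limiting is often implemented with a token bucket. The sketch below is a minimal single-process version; the `TokenBucket` class and its parameters are assumptions for illustration, and a real deployment would usually keep one bucket per API key in a shared store so that all gateway instances agree.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilled at `refill_per_second` (illustrative only)."""

    def __init__(
        self,
        capacity: float,
        refill_per_second: float,
        clock: Callable[[], float] = time.monotonic,
    ):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so tests do not need to sleep
        self.tokens = capacity
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Add the tokens earned since the last call, without exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.refill_per_second)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False  # the caller would typically respond with HTTP 429
```

The capacity controls how large a burst a client may send, while the refill rate sets the sustained request rate.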

Protecting Data:

Data is the most valuable asset of any web application. To protect it, start by following the principle of least privilege, ensuring that data is only accessible to those who absolutely need it. Use encryption to secure data at rest and in transit. Implement secure data storage solutions, and regularly update and patch your systems to protect against known vulnerabilities. Regularly review and audit data access logs to detect any anomalies.

Authentication and Authorization:

Implementing strong authentication and authorization mechanisms is crucial for securing user access. Use multi-factor authentication (MFA) wherever possible to add an extra layer of security. For authorization, use role-based access control (RBAC) to manage user permissions. Implement the principle of least privilege here as well, ensuring users only have the minimum permissions required to perform their tasks. Regularly review and update user roles and permissions.
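A role-based check can be as simple as a lookup from roles to permissions. In the sketch below, the role names, permission strings, and the `is_allowed` function are all made up for illustration; real applications typically load this mapping from a database or an identity provider.

```python
from typing import Dict, FrozenSet, Iterable

# Hypothetical role-to-permission mapping; each role gets only what it needs.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "viewer": frozenset({"report:read"}),
    "editor": frozenset({"report:read", "report:write"}),
    "admin": frozenset({"report:read", "report:write", "user:manage"}),
}


def is_allowed(user_roles: Iterable[str], permission: str) -> bool:
    """Grant access only if at least one of the user's roles carries the permission."""
    return any(
        permission in ROLE_PERMISSIONS.get(role, frozenset())  # unknown roles grant nothing
        for role in user_roles
    )
```

Because unknown roles and missing permissions both resolve to a denial, the check defaults to least privilege.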

In conclusion, securing scalable web applications is an ongoing process that requires a multi-layered approach. By following these best practices, you can significantly enhance the security of your application, protect your users’ data, and ensure the longevity and success of your web application.

FAQ

What is the key difference between traditional web apps and modern, scalable web apps?

How does containerization help in building scalable web apps?

What role do serverless architectures play in building scalable web apps?

How can I ensure my web app’s database remains scalable?

What are some development best practices for building scalable web apps?

- Following the single responsibility principle to keep services small and focused.

- Implementing proper error handling and retries to ensure resilience.

- Using asynchronous communication between services to improve performance.

- Writing unit tests and implementing continuous integration/continuous deployment (CI/CD) pipelines for automated testing and deployment.

- Monitoring and logging application performance to identify and address bottlenecks proactively.
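The error handling and retries item above is commonly implemented as exponential backoff with jitter. The following is a minimal sketch; the `call_with_retries` function and its default values are assumptions chosen for illustration.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(
    operation: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
) -> T:
    """Retry a failing call, doubling the maximum wait each time and adding random jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))  # jitter spreads out retries from many clients
    raise AssertionError("unreachable")
```

Retries are only safe for operations that can be repeated without side effects, so in practice `retry_on` is usually narrowed to transient errors such as timeouts.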

How can I optimize my web app’s API for scalability?

- Using pagination and filtering to limit the amount of data returned in a single request.

- Implementing rate limiting to prevent API abuse and ensure fair resource usage.

- Caching API responses to reduce the load on backend services.

- Using API gateways to handle tasks like authentication, throttling, and request routing.

- Designing APIs with a RESTful or GraphQL approach, focusing on resource-based endpoints and efficient data transfer.
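Pagination, the first item in the list above, can be sketched as a helper that slices a result set and caps the page size. The `paginate` function, its response fields, and the default cap of 100 are assumptions for illustration; against a real database the slice would be an offset or cursor query rather than an in-memory list.

```python
from typing import Any, Dict, List


def paginate(items: List[Any], page: int, page_size: int, max_page_size: int = 100) -> Dict[str, Any]:
    """Return one page of results plus the metadata a client needs to fetch the next one."""
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be positive")
    page_size = min(page_size, max_page_size)  # cap so one request cannot pull everything
    start = (page - 1) * page_size
    return {
        "items": items[start:start + page_size],
        "page": page,
        "page_size": page_size,
        "total": len(items),
        "has_next": start + page_size < len(items),
    }
```

Capping the page size on the server side matters as much as offering pagination, since it bounds the work a single request can trigger.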

How can I ensure my web app remains highly available?

- Deploying services across multiple availability zones or regions to protect against data center failures.

- Using load balancers to distribute traffic evenly across multiple instances of a service.

- Implementing auto-scaling to automatically adjust the number of instances based on demand.

- Setting up redundant database replicas and implementing read replicas to improve read performance and ensure data durability.

- Regularly testing and simulating failure scenarios to identify and address potential weaknesses in the system.

What is the role of a service mesh in managing scalable web apps?

How can I monitor and troubleshoot my scalable web app effectively?

- Implementing distributed tracing to track requests as they flow through multiple services.

- Using logging and centralized log aggregation to collect and analyze application logs.

- Setting up application performance monitoring (APM) tools to track metrics like response times, error rates, and resource utilization.

- Configuring automated alerts to notify teams of performance issues or failures.

- Regularly reviewing and analyzing monitoring data to identify trends and proactively address potential issues.