
Have you ever wondered what it would take to extract valuable data from a million websites in just 24 hours? It’s not a task for the faint-hearted, but with the right web scraping techniques and a strategic approach to large-scale data extraction, it’s entirely possible. In this comprehensive guide, we’re going to delve into the fascinating world of web scraping mastery, where we’ll explore how to transform a vast expanse of the internet into actionable business insights.

Imagine this: you’re a business owner or a data-driven marketer, and you’re faced with a daunting challenge. You need to understand your market, your competitors, and your customers better. You need to make informed decisions, but the data you need is scattered across millions of websites. How do you gather this data efficiently and effectively? This is where web scraping comes into play, and it’s not just about collecting data; it’s about extracting insights that can drive your business forward.

You might be thinking, ‘A million websites in 24 hours? That’s impossible!’ But with the right tools, techniques, and strategies, it’s achievable. We’ve been there, done that, and we’re here to share our experiences and expertise with you.

By the end of this article, you’ll have a solid understanding of web scraping techniques that can handle large-scale data extraction. You’ll learn how to navigate the challenges of scraping a million websites, how to ensure the legality and ethics of your scraping activities, and most importantly, how to turn the data you collect into actionable business insights. We’ll also provide you with practical tips and real-life examples to illustrate our points.

So, buckle up as we embark on this exciting journey. We’ll start by discussing the basics of web scraping and why it’s such a powerful tool for businesses. Then, we’ll dive into the nitty-gritty of large-scale data extraction, exploring techniques like distributed scraping, rotation of IPs, and handling CAPTCHAs. We’ll also discuss the legal and ethical aspects of web scraping, ensuring that you stay on the right side of the law. Finally, we’ll show you how to analyze and interpret the data you’ve collected to gain valuable insights that can drive your business forward.

Are you ready to unlock the power of web scraping and turn the internet into your business’s most valuable asset? Let’s get started!

Unlocking the Power of Large-Scale Data Extraction for Business Growth

In the digital age, data has emerged as the new gold, and large-scale data extraction is the pan that unearths it. Imagine a vast, uncharted territory, teeming with valuable insights, waiting to be explored. This is the realm of big data, where businesses can unlock unprecedented opportunities for growth. By harnessing the power of large-scale data extraction, companies can sift through mountains of information, uncovering patterns, trends, and correlations that were previously invisible. This process, akin to a treasure hunt, involves collecting data from diverse sources, cleaning and organizing it, and then analyzing it to reveal actionable insights. These insights, when translated into strategic decisions, can drive business growth, improve customer experiences, and enhance operational efficiency. It’s like having a crystal ball that predicts market trends, customer preferences, and competitor strategies. But remember, with great power comes great responsibility. Data extraction must be done ethically, respecting privacy laws and ensuring data security. It’s a delicate balance, but when done right, large-scale data extraction can be the secret weapon that propels businesses towards unparalleled success.

Understanding Web Scraping Techniques

Web scraping, a term that might evoke images of stealthy data thieves, is actually a fundamental technique used in web development, data analysis, and even SEO. At its core, web scraping is the process of extracting data from websites, typically in an automated manner. This data can range from product prices on e-commerce sites to weather information on news portals. The applications are vast, from price comparison tools to sentiment analysis on social media posts.

Understanding web scraping techniques is crucial for anyone looking to harness the power of the web’s vast data reservoirs. One of the most basic techniques is HTML parsing. HTML, the language of the web, structures webpages. By parsing HTML, we can extract the data we need. This can be done using libraries like Beautiful Soup in Python.
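
To make HTML parsing concrete, here is a minimal sketch that uses only Python's standard library `html.parser` module rather than Beautiful Soup. The sample markup and the choice of `<h2>` as the element holding the titles are assumptions made for illustration.

```python
from html.parser import HTMLParser


class TitleExtractor(HTMLParser):
    """Collects the text of every <h2> element in a document."""

    def __init__(self):
        super().__init__()
        self._in_h2 = False
        self._buffer = []
        self.titles = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._in_h2 = True
            self._buffer = []

    def handle_data(self, data):
        # Text inside nested tags (such as <em>) still arrives here.
        if self._in_h2:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "h2" and self._in_h2:
            self.titles.append("".join(self._buffer).strip())
            self._in_h2 = False


# Hypothetical page fragment used only for illustration.
SAMPLE_HTML = """
<html><body>
  <h2>First <em>headline</em></h2>
  <p>Some body text.</p>
  <h2>Second headline</h2>
</body></html>
"""

parser = TitleExtractor()
parser.feed(SAMPLE_HTML)
print(parser.titles)
```

A library like Beautiful Soup wraps this kind of event handling in a tree you can query, which is usually more convenient for real pages.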

CSS selectors are another powerful tool in a web scraper’s arsenal. They allow us to target specific elements on a webpage, much like how we use CSS to style webpages. For instance, using a CSS selector, we can extract all the article titles from a news website.

However, web scraping isn’t always about diving into the HTML. Many websites provide APIs, which are essentially interfaces that allow us to access their data in a structured, often more efficient manner. Using APIs, we can often bypass the need for HTML parsing and CSS selectors.

But with great power comes great responsibility. Web scraping, when done unethically, can lead to data theft, website crashes, and even legal issues. It’s crucial to always respect a website’s terms of service and robots.txt rules. Scrape only the data you need, and never overload a server with too many requests. After all, we’re guests on the web, and we should behave as such.
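
Checking robots.txt can be automated with the standard library's `urllib.robotparser`. The sketch below parses a hypothetical robots.txt body from a string so it runs without network access; the user agent name `example-scraper` and the rules themselves are assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. In practice it would be fetched from
# the target site's /robots.txt before any other request is made.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-scraper"  # assumed name for our bot

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())


def is_allowed(url: str) -> bool:
    """Return True if the parsed rules permit USER_AGENT to fetch url."""
    return rules.can_fetch(USER_AGENT, url)


print(is_allowed("https://example.com/products"))
print(is_allowed("https://example.com/private/report"))
print(rules.crawl_delay(USER_AGENT))
```

The crawl delay, when a site declares one, is a natural input for whatever rate limiting the scraper applies.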

Scaling Up: Strategies for Large-Scale Data Extraction

Scaling up web scraping to handle a large number of websites is a complex task that requires careful planning and the implementation of robust strategies. One of the primary challenges is managing the sheer volume of data that needs to be extracted and processed. This is where distributed systems come into play.

Distributed systems allow you to break down your scraping task into smaller, manageable parts that can be processed simultaneously by multiple machines. This not only speeds up the extraction process but also ensures that if one machine fails, the others can continue working, providing a level of fault tolerance. Tools like Apache Hadoop and Spark provide a distributed computing framework for processing the large datasets that result, while the crawl itself is usually split by giving each machine its own share of the URLs.
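
One simple way to split a crawl across machines is to assign each URL to a worker by hashing its hostname, so that all pages of a site land on the same worker. This is a sketch under assumptions: the function names, the worker count, and the sample URLs are hypothetical, and a production system would more likely use a shared queue.

```python
import hashlib
from collections import defaultdict
from urllib.parse import urlsplit


def worker_for(url: str, num_workers: int) -> int:
    """Map a URL to a worker index using a stable hash of its hostname."""
    host = urlsplit(url).hostname or ""
    digest = hashlib.sha256(host.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_workers


def partition(urls, num_workers):
    """Group URLs into per-worker lists keyed by worker index."""
    shards = defaultdict(list)
    for url in urls:
        shards[worker_for(url, num_workers)].append(url)
    return shards


# Hypothetical URLs used only for illustration.
SAMPLE_URLS = [
    "https://example.com/a",
    "https://example.com/b",
    "https://example.org/",
    "https://example.net/page",
]

for worker, assigned in sorted(partition(SAMPLE_URLS, 4).items()):
    print(worker, assigned)
```

Keeping a whole site on one worker makes it easier to enforce a per-site request limit, at the cost of uneven load when a few sites are much larger than the rest.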

However, with great power comes great responsibility. A distributed scraper can quickly overwhelm a website’s server if not managed properly, so cap the request rate per target domain across all of your machines. On your own side, load balancing spreads the work so that no single machine in your cluster is overwhelmed; tools like Nginx or HAProxy can do this for the HTTP-based services in your pipeline.
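
One way to keep a scraper from overwhelming a target server is to enforce a minimum delay between requests to the same host. The class below is a minimal single-process sketch; the name `DomainThrottle` and the injectable `clock` and `sleep` parameters are assumptions added so the behaviour can be checked without real waiting.

```python
import time
from urllib.parse import urlsplit


class DomainThrottle:
    """Enforces a minimum delay between requests to the same host."""

    def __init__(self, min_delay, clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay
        self._clock = clock
        self._sleep = sleep
        self._next_allowed = {}  # host -> earliest time of the next request

    def wait(self, url):
        """Block until url's host may be contacted; return seconds waited."""
        host = urlsplit(url).hostname or ""
        now = self._clock()
        ready_at = self._next_allowed.get(host, now)
        delay = max(0.0, ready_at - now)
        if delay:
            self._sleep(delay)
        # The request happens at max(now, ready_at); schedule the next slot.
        self._next_allowed[host] = max(now, ready_at) + self.min_delay
        return delay


# Usage: call wait() immediately before each request.
throttle = DomainThrottle(min_delay=2.0)
print(throttle.wait("https://example.com/first"))  # first request: no wait
```

In a multi-machine setup this state would need to live somewhere shared, or each host would need to be pinned to a single worker.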

Efficient data storage is another crucial aspect of large-scale data extraction. Storing data in a structured format like JSON or CSV can make it easier to parse and analyze later. For very large datasets, consider using a database system like MongoDB or Cassandra, which are designed to handle large amounts of data efficiently.
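
As a small illustration of structured storage, the sketch below writes scraped records as JSON Lines (one JSON object per line), a layout that can be appended to and read back record by record. The helper names and sample records are hypothetical, and an in-memory buffer stands in for a file.

```python
import io
import json


def write_jsonl(records, stream):
    """Write each record as one JSON object per line; return the count."""
    count = 0
    for record in records:
        stream.write(json.dumps(record, ensure_ascii=False) + "\n")
        count += 1
    return count


def read_jsonl(stream):
    """Yield records back from a JSON Lines stream, skipping blank lines."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# Hypothetical scraped records used only for illustration.
SAMPLE_RECORDS = [
    {"url": "https://example.com/p/1", "price": 19.99},
    {"url": "https://example.com/p/2", "price": 5.0},
]

buffer = io.StringIO()  # stands in for open("data.jsonl", "a")
print(write_jsonl(SAMPLE_RECORDS, buffer))
buffer.seek(0)
print(list(read_jsonl(buffer)))
```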

Finally, the role of cloud computing in facilitating large-scale data extraction cannot be overstated. Cloud computing provides on-demand computing resources, allowing you to scale your operations up or down as needed. Services like Amazon Web Services (AWS) and Google Cloud Platform (GCP) offer a range of tools that can help you manage and process large datasets. They also provide robust security features to protect your data.

In conclusion, scaling up web scraping to handle a large number of websites requires a multi-pronged approach. It involves using distributed systems for parallel processing, load balancing to manage traffic, efficient data storage solutions, and leveraging the power of cloud computing. Each of these strategies plays a crucial role in ensuring that your large-scale data extraction project runs smoothly and efficiently.

Overcoming Challenges in Large-Scale Web Scraping

Embarking on a large-scale web scraping project is akin to navigating a vast, uncharted territory, filled with both treasures of data and formidable challenges. One of the most daunting obstacles is website blocking. Websites, especially those with sensitive data, employ sophisticated measures to deter unwanted scraping. They may block IP addresses that exhibit scraping behavior, or use CAPTCHA tests to ensure human interaction. Start by respecting the website’s `robots.txt` file and rate limits, which helps maintain a harmonious relationship with the target site. Where a site permits scraping but throttles by address, consider implementing IP rotation: commercial proxy services and cloud providers like AWS offer pools of IP addresses that requests can be spread across.
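
The rotation logic itself can be very small. The sketch below cycles through a pool round-robin and skips addresses that have been marked as failing; the class name `ProxyPool` and the proxy addresses are hypothetical, and the code only selects a proxy rather than sending any request.

```python
import itertools


class ProxyPool:
    """Hands out proxies round-robin, skipping ones marked as bad."""

    def __init__(self, proxies):
        self._proxies = list(proxies)
        if not self._proxies:
            raise ValueError("at least one proxy is required")
        self._bad = set()
        self._cycle = itertools.cycle(self._proxies)

    def next_proxy(self):
        # Look at each proxy at most once per call.
        for _ in range(len(self._proxies)):
            proxy = next(self._cycle)
            if proxy not in self._bad:
                return proxy
        raise RuntimeError("no healthy proxies left")

    def mark_bad(self, proxy):
        """Exclude a proxy after it was blocked or timed out."""
        self._bad.add(proxy)


# Hypothetical proxy addresses used only for illustration.
pool = ProxyPool(["http://10.0.0.1:8080", "http://10.0.0.2:8080"])
print(pool.next_proxy())
print(pool.next_proxy())
```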

Data quality issues are another beast to tame in large-scale web scraping. As the scale increases, so does the likelihood of encountering inconsistent, incomplete, or incorrect data. To ensure data quality, implement rigorous data validation checks. This could involve comparing scraped data with known values, or using machine learning algorithms to identify anomalies. Another approach is to use structured data formats like JSON or XML, which provide a clear schema for data organization.
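
A validation check can be as simple as a function that returns a list of problems for each scraped record. The field names (`name`, `price`, `url`) and the rules below are assumptions about a hypothetical product record, not a fixed schema.

```python
def validate_product(record):
    """Return a list of problems found in a scraped product record."""
    errors = []

    name = record.get("name")
    if not isinstance(name, str) or not name.strip():
        errors.append("name is missing or empty")

    price = record.get("price")
    # bool is a subclass of int, so reject it explicitly.
    if isinstance(price, bool) or not isinstance(price, (int, float)):
        errors.append("price is not a number")
    elif price <= 0:
        errors.append("price must be positive")

    url = record.get("url")
    if not isinstance(url, str) or not url.startswith(("http://", "https://")):
        errors.append("url is not an absolute http(s) URL")

    return errors


# Hypothetical records used only for illustration.
good = {"name": "Widget", "price": 9.5, "url": "https://example.com/w"}
bad = {"name": " ", "price": "9.50", "url": "/w"}

print(validate_product(good))
print(validate_product(bad))
```

Records that fail can be logged and set aside for review instead of silently entering the dataset.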

Lastly, let’s not forget the challenge of handling dynamic websites. Modern websites often rely on JavaScript to load content, making it invisible to traditional web scraping methods. To overcome this, consider using browser automation tools like Puppeteer or Selenium, which drive a real browser, often in headless mode. These tools execute JavaScript and render the dynamic content, allowing you to scrape the full webpage.

In conclusion, large-scale web scraping is a complex task that requires a multi-pronged approach. By respecting website rules, rotating IPs, validating data, and handling dynamic content, we can overcome these challenges and extract valuable data from the vast expanse of the web.

Data Extraction Tools and Libraries

In the realm of data extraction, web scraping has emerged as a powerful tool, enabling us to harvest valuable information from the vast expanse of the World Wide Web. A plethora of tools and libraries have been developed to facilitate this process, each with its unique features and use cases. Let’s delve into some of the most popular ones.

Beautiful Soup is a Python library that makes it easy to scrape information from web pages. It creates a parse tree from page source code that can be used to extract data in a hierarchical and readable manner. Beautiful Soup is lightweight, easy to use, and well-documented, making it an excellent choice for beginners. It’s particularly useful when you need to extract data that’s already in a structured format, like HTML tables. Here’s a simple example:

```python
import requests
from bs4 import BeautifulSoup

# Fetch the page and parse it with Python's built-in HTML parser.
response = requests.get('https://example.com', timeout=10)
soup = BeautifulSoup(response.text, 'html.parser')
print(soup.find('title'))
```

Scrapy is a more robust framework for building web crawlers. It’s written in Python and follows a modular approach, allowing you to create spiders (crawlers) that can navigate websites and extract data. Scrapy is efficient, with built-in support for handling requests concurrently. It also has a rich ecosystem of extensions for tasks like rotating proxies, handling cookies, and more. However, it has a steeper learning curve compared to Beautiful Soup.

```python
import scrapy


class ExampleSpider(scrapy.Spider):
    name = 'example'
    start_urls = ['https://example.com']

    def parse(self, response):
        self.log('Visited %s' % response.url)
        # response.follow resolves relative links against the current page.
        for link in response.css('a::attr(href)').getall():
            yield response.follow(link, callback=self.parse)
```

Selenium is a web testing library that can also be used for web scraping. It automates web browsers, allowing it to interact with dynamic websites that rely on JavaScript to load data. This makes it a powerful tool for scraping modern web applications. However, it’s slower than other tools due to the overhead of browser automation, and it requires more resources to run. Here’s a basic example:

```python
from selenium import webdriver

driver = webdriver.Chrome()
try:
    driver.get('https://example.com')
    print(driver.title)
finally:
    # Always close the browser, even if the page load fails.
    driver.quit()
```

Each of these tools has its own strengths and weaknesses, and the choice between them depends on the specific needs of your project. Beautiful Soup is great for simple, static websites, Scrapy is ideal for large-scale crawling, and Selenium is necessary for dynamic, JavaScript-heavy sites.

Data Cleaning and Transformation

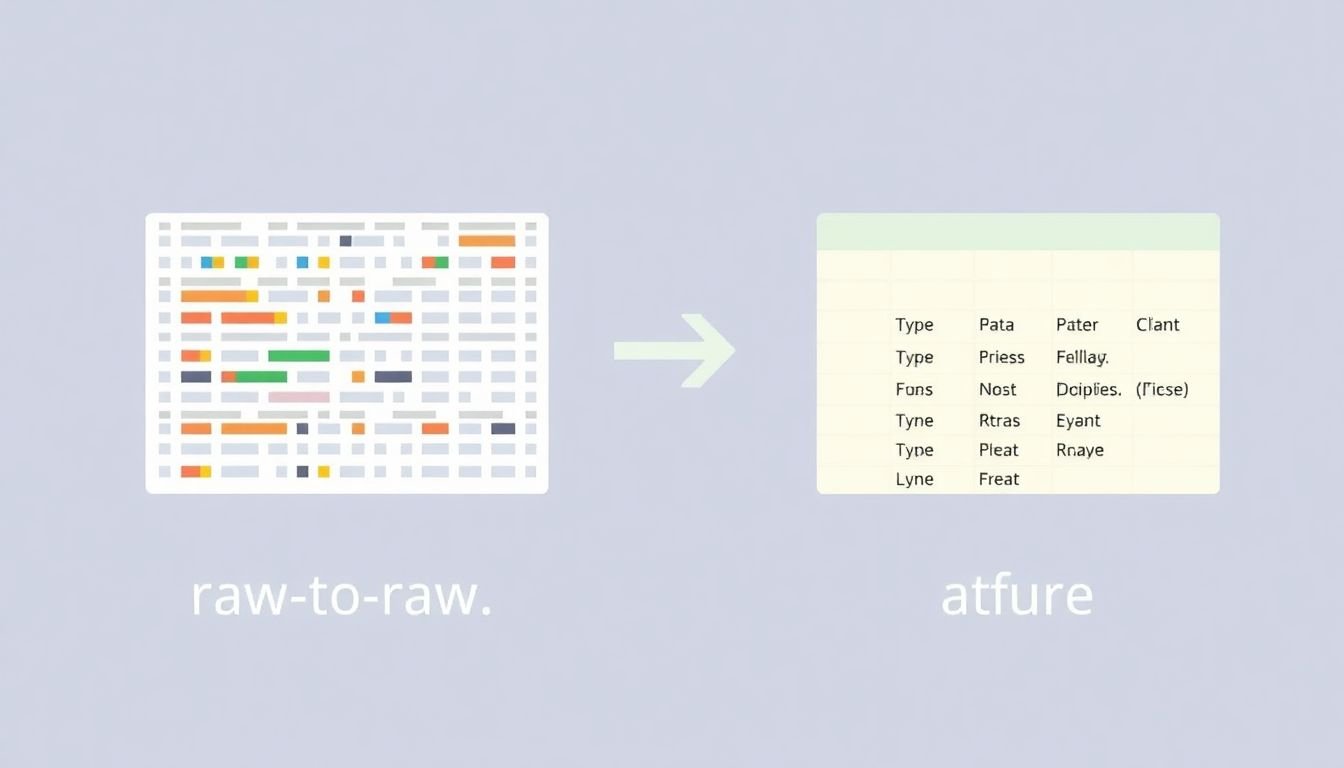

Data cleaning and transformation, often the unsung heroes of the data analysis process, play an indispensable role in preparing raw, scraped data for meaningful analysis. Raw data, fresh off the digital vine, is often a messy, unstructured beast, riddled with inconsistencies, inaccuracies, and gaps. It’s like trying to cook a gourmet meal with ingredients straight from the garden: it requires some serious prep work.

First, let’s talk about data normalization. Imagine you’re analyzing customer data from different sources, each with its own way of representing ages: some in years, others in months, and a few even in days. A 30-year-old and a 30-month-old are not the same, but without normalization, your analysis might treat them as such. Normalization ensures all data is on the same scale, making comparisons fair and accurate. It’s like converting all your ingredients to the same unit of measurement before you start cooking.

Next, we have the dreaded missing values, the data equivalent of a black hole, sucking in any analysis that dares to get too close. They can skew results, create biases, and generally make your analysis less reliable. There are several techniques to handle missing values, depending on the context. You might impute them with mean, median, or mode values, or use more complex methods like regression imputation. Sometimes, the best course of action is to simply remove the missing values, but this should be done judiciously to avoid losing valuable data.

Lastly, data enrichment is like adding those secret herbs and spices to your dish. It involves supplementing your data with additional information to provide more context and depth. For instance, if you’re analyzing sales data, enriching it with weather data could provide valuable insights into how sales are affected by weather conditions. Enrichment can help you uncover hidden patterns and trends, making your analysis more robust and insightful.

In essence, data cleaning and transformation are not just about making data look neat and tidy. They are about turning raw, unstructured data into a valuable, analysis-ready resource. It’s the difference between a heap of uncooked ingredients and a delicious, well-balanced meal. So, the next time you’re tempted to dive straight into analysis, remember the importance of these preparatory steps. Your analysis will thank you for it.
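
The age example and median imputation can be sketched in a few lines of standard-library Python. The function names, the unit labels, and the sample values are assumptions for illustration, and 365.25 days per year is a simplifying approximation.

```python
from statistics import median

DAYS_PER_YEAR = 365.25  # approximation that averages in leap years
MONTHS_PER_YEAR = 12


def age_in_years(value, unit):
    """Convert an age given in years, months, or days to years."""
    if value is None:
        return None  # keep missing values visible for the imputation step
    if unit == "years":
        return float(value)
    if unit == "months":
        return value / MONTHS_PER_YEAR
    if unit == "days":
        return value / DAYS_PER_YEAR
    raise ValueError(f"unknown unit: {unit!r}")


def impute_median(values):
    """Replace None entries with the median of the known values."""
    known = [v for v in values if v is not None]
    if not known:
        return list(values)
    fill = median(known)
    return [fill if v is None else v for v in values]


# Hypothetical raw ages from different sources: (value, unit).
raw_ages = [(30, "years"), (30, "months"), (None, "years"), (7305, "days")]

normalized = [age_in_years(value, unit) for value, unit in raw_ages]
print(impute_median(normalized))
```

Normalizing before imputing matters here: a median taken over mixed units would be meaningless.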

Extracting Actionable Business Insights

Extracting actionable business insights from scraped data is a powerful process that combines web scraping, data visualization, machine learning, and natural language processing. Let’s delve into this process with a real-world example: analyzing e-commerce product reviews to improve sales strategies.

Firstly, we scrape review data from e-commerce websites using tools like Beautiful Soup or Scrapy. We target review text, ratings, and timestamps. Our dataset now resembles a collection of customer opinions, but it’s unstructured and noisy.

Next, we employ natural language processing (NLP) techniques to clean and structure this data. We use libraries like NLTK or spaCy to tokenize text, remove stop words, and perform lemmatization. We also extract sentiment from reviews using a rule-based tool like VADER or a transformer model such as BERT fine-tuned for sentiment classification. Now, our data is clean, structured, and ready for analysis.

Data visualization is crucial for identifying trends and patterns. We use libraries like Matplotlib and Seaborn to create visualizations. For instance, a bar chart can display the distribution of star ratings, revealing which products are most loved or hated. A line chart can show how sentiment changes over time, indicating when a product’s popularity peaks or plummets.

Machine learning can uncover deeper insights. We can train a topic modeling algorithm like Latent Dirichlet Allocation (LDA) to identify common themes in reviews. This helps us understand what customers love or dislike about our products. We can also use clustering algorithms to segment customers based on their review behavior, enabling targeted marketing strategies.

Here’s an example of an actionable insight: ‘Product X has a high negative sentiment score (0.3) in the ‘battery life’ topic, with a significant increase in negative reviews over the past month.’ This insight suggests that customers are increasingly dissatisfied with the battery life of Product X, which warrants prompt investigation and, if a defect is confirmed, corrective action.
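
A trend like the one in this insight can be computed by tracking the share of negative reviews per month for a given topic. The sketch below assumes each review already carries a topic label and a signed sentiment score from the earlier steps; the field names and sample reviews are hypothetical.

```python
from collections import defaultdict


def negative_share_by_month(reviews, topic):
    """Return {YYYY-MM: fraction of reviews with negative sentiment}."""
    totals = defaultdict(int)
    negatives = defaultdict(int)
    for review in reviews:
        if review["topic"] != topic:
            continue
        month = review["date"][:7]  # ISO dates: first 7 chars are YYYY-MM
        totals[month] += 1
        if review["sentiment"] < 0:
            negatives[month] += 1
    return {month: negatives[month] / totals[month] for month in sorted(totals)}


# Hypothetical labelled reviews used only for illustration.
SAMPLE_REVIEWS = [
    {"date": "2024-05-03", "topic": "battery life", "sentiment": 0.4},
    {"date": "2024-05-17", "topic": "battery life", "sentiment": -0.2},
    {"date": "2024-06-02", "topic": "battery life", "sentiment": -0.6},
    {"date": "2024-06-20", "topic": "battery life", "sentiment": -0.1},
    {"date": "2024-06-21", "topic": "screen", "sentiment": 0.8},
]

print(negative_share_by_month(SAMPLE_REVIEWS, "battery life"))
```

A rising share from one month to the next is the signal worth flagging; with only a handful of reviews per month, as in this toy data, the numbers would be too noisy to act on.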

In conclusion, extracting actionable business insights from scraped data is a multi-step process that transforms unstructured data into valuable, data-driven decisions. By combining web scraping, data visualization, machine learning, and NLP, we can gain a competitive edge in today’s data-driven business landscape.

Ethical Web Scraping and Legal Considerations

Web scraping, the automated extraction of data from websites, has become an invaluable tool for research, data analysis, and even business intelligence. However, it’s not without its ethical and legal considerations. Let’s delve into these aspects to ensure we’re scraping responsibly.

Firstly, it’s crucial to respect the website’s terms of service. These rules are there to protect the site’s resources and user data. Scraping in violation of these terms can lead to your IP being blocked or even legal action. Always check the ‘robots.txt’ file, a standard way for websites to communicate what they allow or disallow in terms of web scraping.

Ethically, web scraping should be done with respect for the website and its users. This means not overloading the server with too many requests, which could slow down or even crash the site. It also means not scraping sensitive user data without explicit permission. Remember, just because data is publicly available doesn’t mean it’s ethically sound to scrape it.

Legally, data privacy laws like GDPR in Europe and CCPA in California have significant implications for web scraping. These laws protect user data and can impose hefty fines if violated. It’s essential to understand these laws and ensure that any data scraped is done so in compliance with them.

Copyright issues are another legal consideration. Scraping content that is protected by copyright can lead to infringement claims. To avoid this, ensure that the data you’re scraping is not protected by copyright or that you have permission to use it.

Here are some guidelines for ethical web scraping:

- Respect the website’s terms of service and ‘robots.txt’ rules.

- Don’t overload the server with too many requests.

- Don’t scrape sensitive user data without explicit permission.

- Ensure compliance with data privacy laws.

- Respect copyright laws and only scrape data that’s not protected or with proper permission.

By following these guidelines, we can ensure that web scraping remains a useful tool that benefits society without causing harm.

Monitoring and Maintaining Web Scraping Projects

Web scraping, a powerful tool for extracting data from websites, is only as useful as its ability to consistently deliver accurate and up-to-date information. This is where monitoring and maintaining web scraping projects come into play, ensuring their long-term success and reliability.

Firstly, it’s crucial to monitor websites for changes. Websites are dynamic entities, constantly evolving with updates, redesigns, or even complete overhauls. A scraper that worked perfectly yesterday might fail today due to these changes. To tackle this, implement strategies like periodic testing of scrapers, using website change detection tools, or setting up alerts for website updates.
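
One lightweight form of change detection is to fingerprint a page's structure (its tags and class names) and compare it with the fingerprint from the last successful run. This is a sketch under assumptions: the function name and sample markup are hypothetical, and real pages may need a more selective fingerprint to avoid false alarms.

```python
import hashlib
from html.parser import HTMLParser


class _TagCollector(HTMLParser):
    """Records each start tag together with its class attribute."""

    def __init__(self):
        super().__init__()
        self.tags = []

    def handle_starttag(self, tag, attrs):
        classes = dict(attrs).get("class") or ""
        self.tags.append(f"{tag}.{classes}")


def structure_fingerprint(html):
    """Hash the sequence of tags and classes, ignoring the text content."""
    collector = _TagCollector()
    collector.feed(html)
    joined = "|".join(collector.tags)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


# Hypothetical snapshots of the same page on different days.
yesterday = '<div class="price"><span>10</span></div>'
new_price = '<div class="price"><span>12</span></div>'
redesign = '<div class="cost"><span>12</span></div>'

print(structure_fingerprint(yesterday) == structure_fingerprint(new_price))
print(structure_fingerprint(yesterday) == structure_fingerprint(redesign))
```

Because text is ignored, ordinary content updates do not trigger an alert, while a renamed class that would break a CSS selector does.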

Broken links are another common issue that can disrupt the smooth functioning of a web scraping project. They can occur due to website changes, typos, or even server issues. Regularly checking and handling broken links is essential. You can use tools like link checkers or write scripts to automatically detect and fix broken links.

Updating scrapers as needed is another critical aspect of maintenance. As websites change, your scrapers might need to be updated to adapt to new structures or data formats. This could involve modifying CSS selectors, adjusting regular expressions, or even rewriting entire scraping logic.

Here’s a simple step-by-step guide to help you maintain your web scraping projects:

- Regularly test your scrapers to ensure they’re still working as expected.

- Use website change detection tools or set up alerts for updates.

- Implement broken link detection and handling mechanisms.

- Update scrapers as needed to adapt to website changes.

By following these strategies and maintaining a proactive approach, you can ensure that your web scraping projects remain robust, reliable, and long-lived.

Case Studies: Large-Scale Web Scraping in Action

Large-scale web scraping, when executed responsibly and ethically, can unlock a treasure trove of insights for businesses. Let’s delve into two compelling case studies that illustrate the power and potential of this data extraction technique.

Case Study 1: Retail Price Intelligence with Webhose.io

Webhose.io, a leading web data extraction platform, undertook a large-scale web scraping project for a major retail client. The client aimed to monitor competitor pricing and product availability in real-time to optimize their own pricing strategy. The project faced several challenges, including:

- Handling dynamic websites that frequently change their structure and content.

- Dealing with CAPTCHAs and anti-scraping measures.

- Extracting data at scale without overwhelming target servers.

Webhose.io implemented the following solutions:

- Developed a robust and adaptable scraping engine that could handle website changes.

- Employed advanced machine learning techniques to bypass CAPTCHAs and detect anti-scraping measures.

- Implemented a rotating proxy network to distribute scraping requests and avoid server overload.

The actionable insights extracted from this project allowed the retail client to:

- Identify pricing trends and optimize their own pricing strategy.

- Monitor competitor product offerings and adjust their inventory accordingly.

- Gain a competitive edge by making data-driven decisions based on real-time market intelligence.

Case Study 2: Sentiment Analysis with Meltwater

Meltwater, a global leader in media intelligence, used large-scale web scraping to gather data for sentiment analysis. Their client, a multinational corporation, wanted to monitor global public sentiment towards their brand. The project’s challenges included:

- Scraping data from diverse sources in multiple languages.

- Handling the vast volume of data generated daily.

- Ensuring the accuracy and relevance of the extracted data.

Meltwater’s solutions comprised:

- Developing a multilingual scraping engine that could handle diverse data sources.

- Implementing a big data pipeline to process and store the vast amounts of data generated.

- Employing machine learning algorithms to filter and classify the extracted data, ensuring its accuracy and relevance.

The insights gained from this project enabled the multinational corporation to:

- Monitor and analyze global public sentiment towards their brand in real-time.

- Identify trends and patterns in public opinion that could inform their marketing and communication strategies.

- Proactively manage their brand reputation by addressing negative sentiment and capitalizing on positive sentiment.

These case studies demonstrate the transformative power of large-scale web scraping. By overcoming significant challenges and extracting actionable insights, these projects have driven business growth and competitive advantage for the companies involved.

FAQ

What is web scraping and why is it useful for extracting large-scale data?

Web scraping is the automated extraction of data from websites. It is useful at large scale because the data a business needs about its market, competitors, and customers is scattered across many sites, and collecting it by hand is impractical.

How can one scrape data from 1 million websites in 24 hours?

- Use multiple IP addresses to avoid being blocked by websites.

- Implement a rotating proxy service to manage these IP addresses.

- Leverage asynchronous scraping to process multiple websites simultaneously.

- Prioritize websites based on their relevance and expected data yield.

- Use a load balancer to distribute the workload across multiple servers.

- Regularly monitor and adjust your scraping strategy to avoid overloading websites or violating their terms of service.
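
It helps to put numbers on the target before choosing tools. The arithmetic below works out the sustained request rate for one million sites in 24 hours and, using the rule of thumb that concurrency is roughly rate times latency, how many requests must be in flight at once. The 2-second average response time is an assumption, as is fetching exactly one page per site.

```python
import math


def required_rate(total_requests, hours):
    """Sustained requests per second needed to finish within the window."""
    return total_requests / (hours * 3600)


def required_concurrency(rate_per_second, avg_latency_seconds):
    """Requests in flight at once: rate multiplied by average latency."""
    return math.ceil(rate_per_second * avg_latency_seconds)


rate = required_rate(1_000_000, 24)
in_flight = required_concurrency(rate, avg_latency_seconds=2.0)

print(f"{rate:.2f} requests per second")
print(f"{in_flight} concurrent requests")
```

Under these assumptions the target needs about 11.6 requests per second and roughly two dozen concurrent connections, which is modest. The difficulty lies in retries, slow sites, and crawling more than one page per site, all of which multiply these figures.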

What are some advanced web scraping techniques for large-scale data extraction?

- **Concurrent Scraping**: Use multiple threads or processes to scrape multiple websites simultaneously.

- **Distributed Scraping**: Distribute the workload across a cluster of machines to increase scraping speed and capacity.

- **API Scraping**: Some websites provide APIs that can be used to extract data more efficiently and legally than traditional web scraping.

- **Machine Learning for Scraping**: Use machine learning algorithms to identify patterns in website structures and adapt scraping strategies accordingly.

- **Web Crawling**: Implement a web crawler to discover and extract data from websites in a systematic manner.
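
Concurrent scraping is often written with `asyncio`, using a semaphore to cap how many requests run at once. In the sketch below, `fake_fetch` is a stand-in that sleeps briefly instead of making a network call; in a real scraper it would be replaced by an HTTP client call, and the URLs and concurrency limit are assumptions.

```python
import asyncio


async def fake_fetch(url):
    """Stand-in for a real HTTP request; simulates a short network delay."""
    await asyncio.sleep(0.01)
    return f"<html>{url}</html>"


async def scrape_all(urls, fetch, max_concurrency=10):
    """Fetch all URLs with at most max_concurrency requests in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(url):
        async with semaphore:
            try:
                return url, await fetch(url)
            except Exception as exc:  # one failure should not stop the batch
                return url, exc

    # gather returns results in the same order as the input URLs.
    return await asyncio.gather(*(bounded(url) for url in urls))


# Hypothetical URLs used only for illustration.
urls = [f"https://example.com/page/{i}" for i in range(25)]
results = asyncio.run(scrape_all(urls, fake_fetch, max_concurrency=5))
print(len(results))
```

Returning exceptions alongside results keeps failed URLs visible so they can be retried later.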

How can one ensure the legality and ethics of large-scale web scraping?

- **Respect Robots.txt**: Websites use robots.txt files to specify which parts of their site can be scraped. Always respect these rules.

- **Avoid Overloading Servers**: Be mindful of the load your scraping activity places on websites’ servers. Avoid sending too many requests in a short period.

- **Check Terms of Service**: Always review the terms of service of the websites you plan to scrape. Some websites explicitly prohibit web scraping.

- **Use Data Responsibly**: Once you’ve extracted data, use it responsibly. Respect privacy laws and avoid publishing sensitive information.

- **Consider Scraping Alternatives**: If a website provides an API or a data dump, consider using these legal and ethical alternatives to web scraping.

How can one extract actionable business insights from large-scale web scraping?

- **Data Cleaning**: Clean and structure the extracted data to make it analysis-ready.

- **Data Analysis**: Use statistical analysis and data visualization tools to identify trends, patterns, and outliers in the data.

- **Sentiment Analysis**: For textual data, perform sentiment analysis to understand the sentiment behind the text.

- **Competitor Analysis**: Analyze competitors’ websites to understand their strategies, pricing, and product offerings.

- **Market Trends**: Identify market trends by analyzing data from multiple sources.

- **Predictive Modeling**: Use machine learning algorithms to make predictions based on the extracted data, such as forecasting sales or customer behavior.

How can one handle dynamic websites and JavaScript-rendered content in web scraping?

- **Headless Browsers**: Use a browser automation tool like Selenium or Puppeteer to drive a headless browser, render the JavaScript, and extract the resulting HTML.

- **APIs**: If available, use APIs to extract data instead of scraping the website directly.

- **Web Scraping Libraries**: Some web scraping libraries, like Scrapy with Splash or Playwright, support JavaScript rendering.

- **Wait for Elements**: Implement a delay or use explicit waits to allow JavaScript to fully render the content before scraping.

- **Rotate User-Agents**: Some websites block scrapers based on user-agent strings. Rotating user-agents can help bypass these blocks.